Linear Regression

m: number of samples

alpha: learning rate

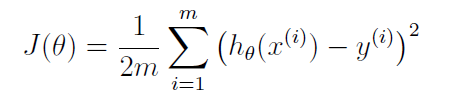

- Cost function J(θ):

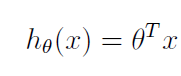

- where:

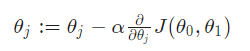
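For reference, here are the hypothesis and cost function written out in LaTeX, in a form consistent with the vectorised cost code in this note. This assumes n features and the usual convention x_0 = 1, so that the first column of the design matrix X is all ones and θ_0 acts as the intercept.

$$
h_\theta(x) = \theta^T x = \theta_0 + \theta_1 x_1 + \dots + \theta_n x_n
$$

$$
J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right)^2
$$

Stacking all m examples as rows of X turns the sum into sum((X*theta - y).^2) / (2*m), which is exactly the vectorised line used later.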

- Gradient descent:

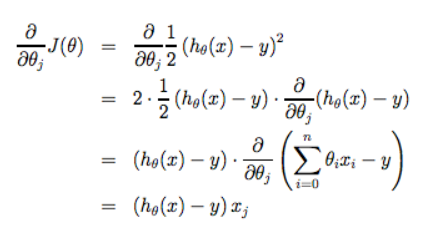
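Written out, the update below follows from differentiating J(θ) above (the 1/2 cancels the exponent 2). All θ_j are updated simultaneously, using the same θ to compute every h_θ(x^(i)); the indexing over j = 0, ..., n assumes the x_0 = 1 convention.

$$
\theta_j := \theta_j - \alpha \frac{1}{m} \sum_{i=1}^{m} \left( h_\theta(x^{(i)}) - y^{(i)} \right) x_j^{(i)}, \qquad j = 0, \dots, n
$$

$$
\theta := \theta - \frac{\alpha}{m} X^T (X\theta - y)
$$

The second (matrix) form is the one the vectorised loop body implements with theta = theta - alpha/m*X'*(h-y).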

- In this case:

- Code for Vectorisation:

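- Cost Function:

      J = sum((X*theta - y).^2) / (2*m);       % squared error over all m examples at once

- Gradient Descent:

      for iter = 1:num_iters
        h = X*theta;                           % predictions for all m examples
        theta = theta - alpha/m * X'*(h - y);  % update every theta_j simultaneously
      end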
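A minimal runnable sketch of the vectorised gradient descent loop, assuming a made-up noiseless dataset y = 1 + 2x and hand-picked values for alpha and num_iters (neither comes from these notes). It also records the cost after each step in a hypothetical J_history vector, which is a common way to check that the learning rate is small enough.

```octave
% Hypothetical toy data: points on the line y = 1 + 2*x (no noise).
x = [1; 2; 3; 4];
y = 1 + 2*x;
m = length(y);
X = [ones(m, 1), x];     % column of ones so theta(1) is the intercept

theta = zeros(2, 1);     % start from theta = 0
alpha = 0.05;            % learning rate (assumed value)
num_iters = 2000;        % number of iterations (assumed value)
J_history = zeros(num_iters, 1);

for iter = 1:num_iters
  h = X*theta;                             % predictions for all m examples
  theta = theta - alpha/m * X'*(h - y);    % simultaneous update of all theta_j
  J_history(iter) = sum((X*theta - y).^2) / (2*m);   % cost after this step
end

disp(theta)   % approaches [1; 2] for this data
```

With a suitable alpha, J_history should never increase; if it grows, alpha is too large.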

- Cost Function:

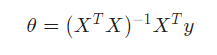
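To illustrate that the vectorised cost line computes the same value as the per-example summation, here is a small Octave check on a hypothetical three-example dataset (X, y and theta are made up for illustration). It also computes the equivalent matrix form r'*r / (2*m), where r is the residual vector.

```octave
% Hypothetical data: intercept column plus one feature, 3 examples.
X = [1 1; 1 2; 1 3];
y = [2; 2.5; 3.5];
theta = [0.5; 1];
m = length(y);

% Loop form: accumulate the squared error one example at a time.
J_loop = 0;
for k = 1:m
  h_k = X(k, :) * theta;               % prediction for example k
  J_loop = J_loop + (h_k - y(k))^2;
end
J_loop = J_loop / (2*m);

% Vectorised form, same expression as in these notes.
J_vec = sum((X*theta - y).^2) / (2*m);

% Matrix form: residual vector dotted with itself.
r = X*theta - y;
J_mat = (r' * r) / (2*m);
```

Here the predictions are [1.5; 2.5; 3.5], so only the first example contributes an error of -0.5, giving J = 0.25 / 6 = 1/24 in all three forms.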

Another solution for linear regression:

- Normal equation: solves for θ in closed form, with no learning rate and no iterations.

- Code:

      theta = pinv(X'*X)*X'*y;
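A minimal sketch of the normal equation on hypothetical noiseless data y = 1 + 2x, compared with Octave's backslash operator, which solves the same least-squares problem. The dataset is an assumption for illustration; the pinv line is the one from these notes.

```octave
% Hypothetical toy data: points on the line y = 1 + 2*x.
x = [1; 2; 3; 4];
y = 1 + 2*x;
m = length(y);
X = [ones(m, 1), x];   % intercept column plus one feature

% Normal equation: theta = (X'X)^(-1) X'y, using pinv as in the notes.
theta_pinv = pinv(X'*X)*X'*y;

% Backslash solves the least-squares problem for X*theta = y directly.
theta_bs = X \ y;

disp(theta_pinv)   % [1; 2] up to rounding for this exact data
```

Using pinv rather than inv keeps the formula usable when X'*X is singular (for example, with redundant features). The trade-off against gradient descent is cost: forming and inverting the n-by-n matrix X'*X grows roughly cubically with the number of features n.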