Logistic Regression

- m: number of samples
- lambda: regularisation factor

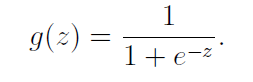

- Sigmoid function

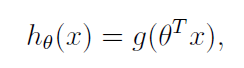

```octave
g = 1 ./ (1 + exp(-z));  % body of sigmoid(z); element-wise, so z can be a scalar, vector or matrix
```

- Hypothesis function

```octave
h = sigmoid(X * theta);  % X: one row per sample, first column all ones; h: m x 1 vector of probabilities
```
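A minimal sketch of the sigmoid and hypothesis lines on a tiny made-up dataset. The values of `X` and `theta` are arbitrary and only for illustration, and `sigmoid` is written as an anonymous function (an assumption, so the snippet runs as a single script) instead of a separate `sigmoid.m` file. The first column of `X` is assumed to be the intercept column of ones.

```octave
% sigmoid as an anonymous function: same formula as above, applied element-wise
sigmoid = @(z) 1 ./ (1 + exp(-z));

% Made-up data: 3 samples, 1 feature, plus a leading column of ones for the intercept
X = [1  0;
     1  2;
     1 -2];
theta = [0; 1];            % illustrative parameters; theta(1) is the intercept

h = sigmoid(X * theta);    % 3 x 1 vector of probabilities P(y = 1 | x)

disp(h')                   % roughly 0.5000  0.8808  0.1192
```

The second and third probabilities add up to 1 because $g(-z) = 1 - g(z)$.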

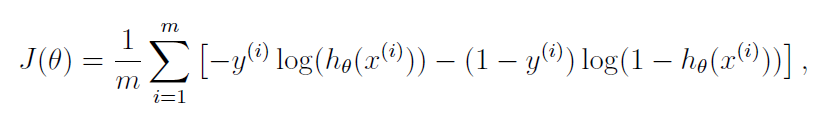

Cost function

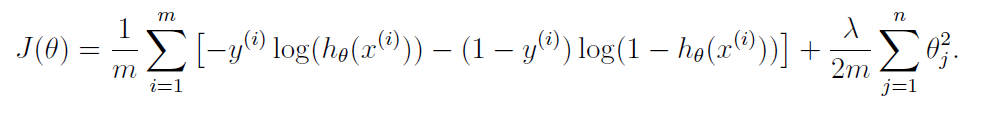

- Regularised cost function

```octave
J = 1/m * sum(-y .* log(h) - (1 - y) .* log(1 - h)) ...
    + lambda/(2*m) * sum(theta(2:end).^2);  % theta(1), the intercept, is not regularised
```
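A small worked example of the regularised cost, assuming a made-up four-sample dataset and a hypothetical `lambda = 1`. The helper name `costReg` is illustrative only; it wraps the same expression as the line above in an anonymous function of `theta`.

```octave
% Made-up data (illustrative only): 4 samples, 2 features, intercept column first
X = [1  1.0  2.0;
     1  2.0  0.5;
     1 -1.0 -1.5;
     1 -2.0  1.0];
y = [1; 1; 0; 0];
m = size(X, 1);            % number of samples
lambda = 1;                % hypothetical regularisation factor

sigmoid = @(z) 1 ./ (1 + exp(-z));

% Regularised cost as a function of theta; theta(1) is excluded from the penalty
costReg = @(theta) 1/m * sum(-y .* log(sigmoid(X*theta)) - (1 - y) .* log(1 - sigmoid(X*theta))) ...
                   + lambda/(2*m) * sum(theta(2:end).^2);

J0 = costReg(zeros(3, 1));   % h = 0.5 for every sample, so J0 = log(2), about 0.6931
J1 = costReg([0; 1; 0]);     % a theta whose sign pattern matches this data
fprintf('J(zeros) = %.4f, J([0;1;0]) = %.4f\n', J0, J1);
```

The value at `theta = zeros(...)` is a convenient sanity check: every $h = 0.5$, each sample contributes $\log 2$ and the penalty is zero, so $J = \log 2 \approx 0.6931$ whatever the labels are.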

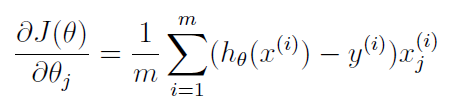

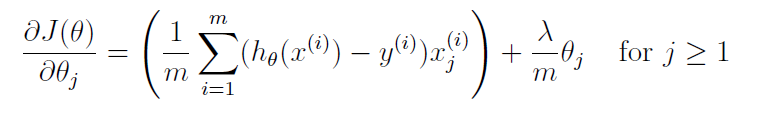

Gradient Descent
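For reference, the derivatives behind the gradient code, worked out from the regularised cost used above. The notation is an assumption added here: $\theta_0$ is the intercept (`theta(1)` in Octave), $n$ is the number of features and $\alpha$ is a learning rate.

$$
J(\theta) = \frac{1}{m}\sum_{i=1}^{m}\Big[-y^{(i)}\log h_\theta(x^{(i)}) - \big(1-y^{(i)}\big)\log\big(1-h_\theta(x^{(i)})\big)\Big] + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^2
$$

$$
\frac{\partial J}{\partial \theta_0} = \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_0^{(i)},
\qquad
\frac{\partial J}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_j^{(i)} + \frac{\lambda}{m}\theta_j \quad (1 \le j \le n)
$$

In vectorised form, with $X$ the design matrix whose first column is all ones:

$$
\nabla J(\theta) = \frac{1}{m}X^\top\big(h - y\big) + \frac{\lambda}{m}\begin{bmatrix}0 & \theta_1 & \cdots & \theta_n\end{bmatrix}^\top,
\qquad
\theta := \theta - \alpha\,\nabla J(\theta).
$$

The derivative of the penalty, $\frac{\lambda}{m}\theta_j$, enters with a plus sign, and the intercept $\theta_0$ is never penalised.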

- Regularised gradient

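```octave
grad = (X' * (h - y)) / m;                             % unregularised gradient, same size as theta
grad(2:end) = grad(2:end) + lambda/m * theta(2:end);   % add the penalty term, skipping the intercept
```

- Use fminunc to learn parameters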
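Because `'GradObj', 'on'` tells fminunc to trust the gradient returned by the cost function, a wrong sign in `grad` quietly misleads the optimiser. The sketch below first compares the regularised gradient with a central-difference estimate, then runs plain batch gradient descent as a fminunc-free alternative. Everything in it is illustrative: the data, `lambda`, the step size `alpha`, the iteration count and the helper names `costReg` and `gradReg` are hypothetical, and `sigmoid` is an anonymous function rather than a separate file.

```octave
% Made-up data (illustrative only), intercept column first
X = [1  1.0  2.0;
     1  2.0  0.5;
     1 -1.0 -1.5;
     1 -2.0  1.0;
     1  0.5 -0.5];
y = [1; 1; 0; 0; 1];
m = size(X, 1);
lambda = 1;                % hypothetical regularisation factor

sigmoid = @(z) 1 ./ (1 + exp(-z));
costReg = @(t) 1/m * sum(-y .* log(sigmoid(X*t)) - (1 - y) .* log(1 - sigmoid(X*t))) ...
               + lambda/(2*m) * sum(t(2:end).^2);
% Regularised gradient: the penalty term is ADDED and skips the intercept t(1)
gradReg = @(t) (X' * (sigmoid(X*t) - y)) / m + (lambda/m) * [0; t(2:end)];

% 1) Central-difference gradient check at an arbitrary point
theta = [0.1; -0.2; 0.3];
epsilon = 1e-5;
numgrad = zeros(size(theta));
for j = 1:numel(theta)
  e = zeros(size(theta));
  e(j) = epsilon;
  numgrad(j) = (costReg(theta + e) - costReg(theta - e)) / (2 * epsilon);
end
maxDiff = max(abs(numgrad - gradReg(theta)));   % should be tiny if gradReg is right

% 2) Plain batch gradient descent with a fixed, hypothetical learning rate
alpha = 0.5;
theta = zeros(3, 1);
J = zeros(100, 1);
for iter = 1:100
  theta = theta - alpha * gradReg(theta);   % simultaneous update of all parameters
  J(iter) = costReg(theta);                 % record the cost after each step
end
fprintf('max gradient difference: %g\n', maxDiff);
fprintf('cost after 1 step: %.4f, after 100 steps: %.4f\n', J(1), J(end));
```

With these values each step should lower the cost, since `alpha` is small relative to the curvature of this tiny problem; on real data the step size usually has to be tuned, which is one practical reason to hand the problem to fminunc instead.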

```octave
% Set options for fminunc
options = optimset('GradObj', 'on', 'MaxIter', 400);

% Run fminunc to obtain the optimal theta
% This function will return theta and the cost
% With 'GradObj' 'on', costFunction(t, X, y) must return both the cost J and the gradient grad
[theta, cost] = fminunc(@(t)(costFunction(t, X, y)), initial_theta, options);
```

- Predict

```octave
p = sigmoid(X * theta) >= 0.5;  % predict 1 where h >= 0.5, otherwise 0
```
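A minimal sketch of the prediction step plus a training-accuracy figure, assuming made-up data and a hand-picked `theta` rather than one learned by fminunc; `sigmoid` is again an anonymous function for self-containment.

```octave
% Made-up data and a hand-picked theta (illustrative only), intercept column first
X = [1  1.0  2.0;
     1  2.0  0.5;
     1 -1.0 -1.5;
     1 -2.0  1.0];
y = [1; 1; 0; 0];
theta = [0; 1; 0];

sigmoid = @(z) 1 ./ (1 + exp(-z));
p = sigmoid(X * theta) >= 0.5;          % logical m x 1 vector of 0/1 predictions
accuracy = mean(double(p == y)) * 100;  % percentage of training samples classified correctly
fprintf('Train accuracy: %.1f%%\n', accuracy);
```

Since $g(z) \ge 0.5$ exactly when $z \ge 0$, `X * theta >= 0` gives the same predictions without evaluating the sigmoid.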